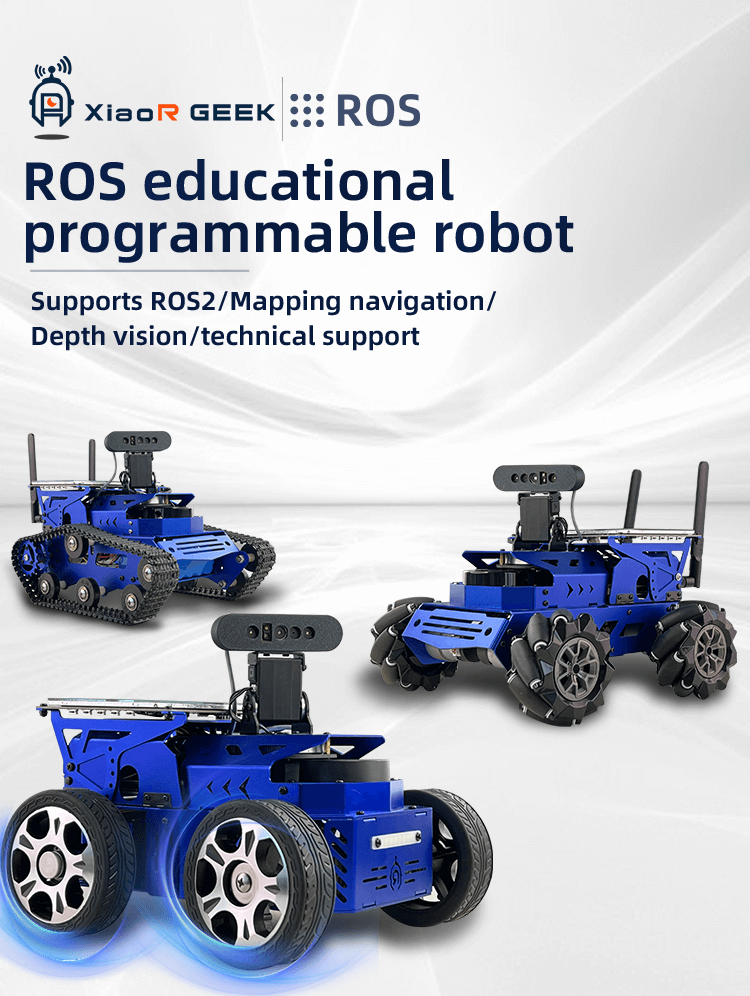

The ROS2 Hunter Ackerman is an educational, programmable autonomous-navigation smart robot car designed by XiaoR Technology for in-depth exploration of autonomous driving and robot navigation. It is built on the NVIDIA Jetson Nano and uses the ROS2 framework, making it a professional-grade autonomous navigation car. The chassis adopts an Ackermann steering structure, the steering arrangement used in modern automobiles. It can closely reproduce the steering behavior of a real vehicle and is particularly suitable for research scenarios that require a high degree of fidelity to real vehicles.

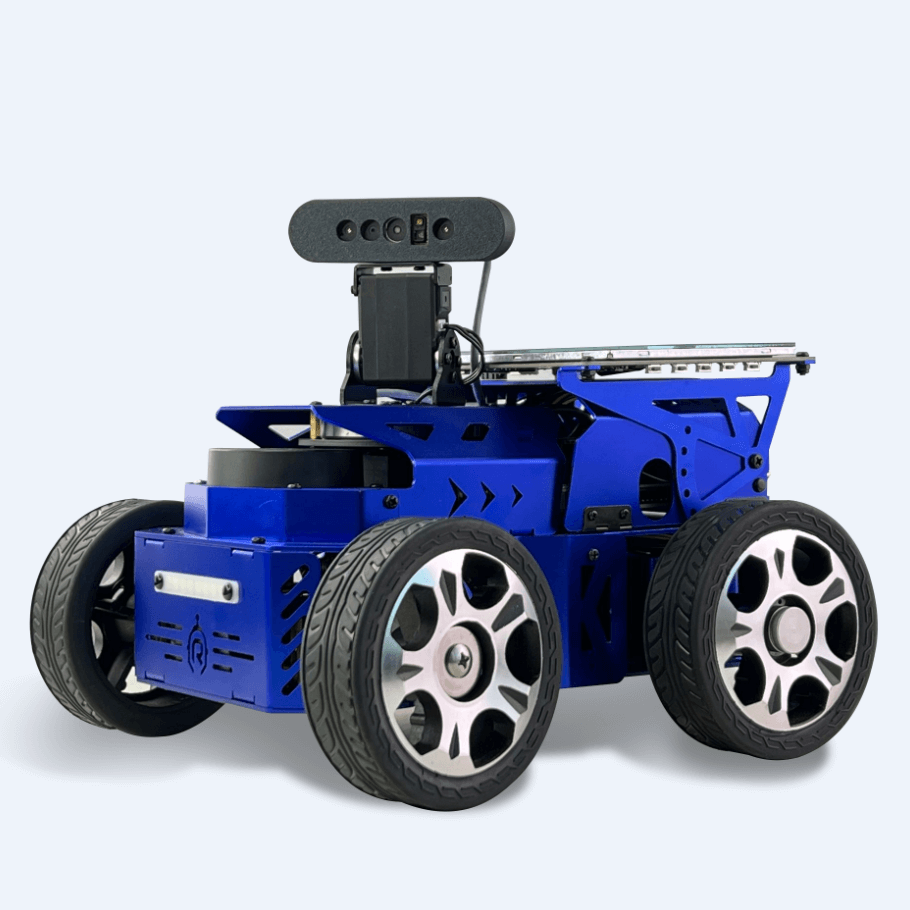

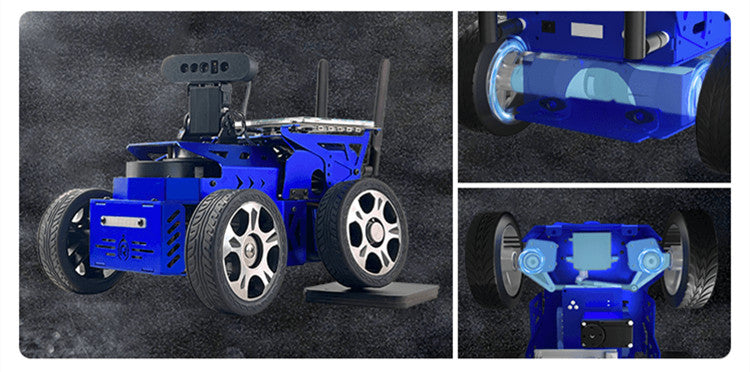

In addition, the robot is equipped with high-performance hardware components, including an NVIDIA Jetson Nano main control board, high-torque geared motors with encoders, a lidar, a 3D depth camera, a 7-inch LCD screen, and programmable car lights. Together, these components let the robot perform complex tasks such as precise motion control, mapping and navigation path planning with ROS SLAM algorithms, deep learning, and visual interaction.

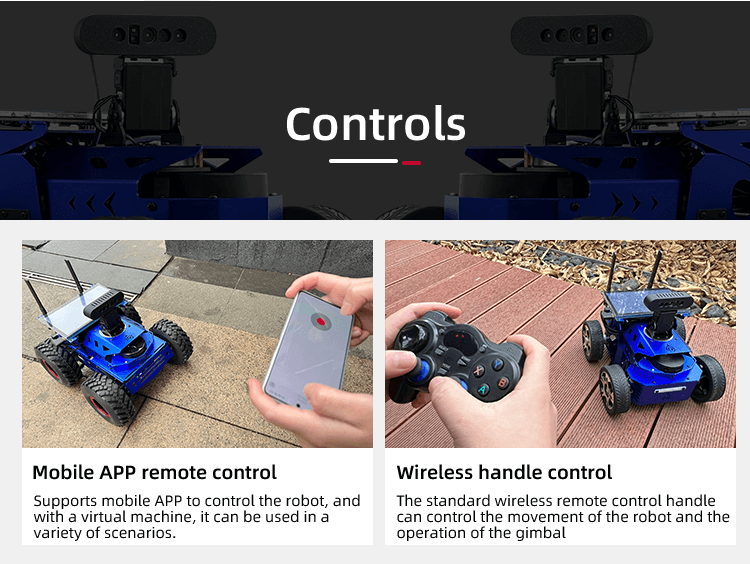

To improve the user experience, ROSHunter supports multiple control methods, including a mobile app, RViz on a virtual machine, and a PS4 controller. Users can easily switch between mapping and navigation modes. The robot is designed to let users get into development quickly and intuitively, so it also comes with a complete set of ROS courses covering technical materials, functional source code, documentation, and explanatory videos to help users quickly master the development and application of ROS robots.

In addition, ROSHunter is equipped with the "ROS human-computer interaction system" independently developed by XiaoR Technology. It provides real-time feedback on the robot's operating status and program execution (such as mapping, navigation, and map saving) and lets users view system information, further enhancing the robot's interactivity and practicality. This autonomous navigation robot is not only ideal for technology enthusiasts and researchers, but also an excellent tool for teaching advanced technologies in education.

Key technologies: C++/Python/ROS2/SLAM autonomous navigation/3D depth vision/autonomous driving

Product Features

Ackermann steering mode: The car uses Ackermann steering. The steering mechanism and its steering and tie rods are designed according to the Ackermann principle, so the inner and outer wheels steer at different angles when the vehicle turns, improving the robot's maneuverability and stability.
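To make the Ackermann principle concrete, here is a minimal Python sketch of the ideal geometry: for a given turn radius, the inner front wheel must steer more sharply than the outer one. The function name `ackermann_angles` and the wheelbase and track values are illustrative assumptions, not the product's actual dimensions or code.

```python
import math


def ackermann_angles(wheelbase, track, turn_radius):
    """Return (inner, outer) front-wheel steering angles in radians.

    Ideal Ackermann geometry: both front wheels point along circles that
    share one center on the extended rear-axle line. turn_radius is the
    distance from that center to the midpoint of the rear axle.
    """
    if turn_radius <= track / 2:
        raise ValueError("turn radius must exceed half the track width")
    inner = math.atan(wheelbase / (turn_radius - track / 2))
    outer = math.atan(wheelbase / (turn_radius + track / 2))
    return inner, outer


# Hypothetical dimensions in meters, chosen only for illustration.
inner, outer = ackermann_angles(wheelbase=0.25, track=0.20, turn_radius=1.0)
print(f"inner: {math.degrees(inner):.1f} deg, outer: {math.degrees(outer):.1f} deg")
```

The two angles always satisfy cot(outer) - cot(inner) = track / wheelbase, which is the condition the steering linkage is designed to approximate.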

2-DOF gimbal: The robot is equipped with a high-performance 2-DOF gimbal that lets it look left and right and up and down, widening the range its view can cover.

Wi-Fi wireless remote control: The car broadcasts a Wi-Fi signal after it is turned on. A mobile phone or tablet can connect to the car over Wi-Fi and control it with a dedicated app.

2.4G wireless gamepad remote control: The car supports real-time remote control with a 2.4G wireless gamepad, and the control link is stable.

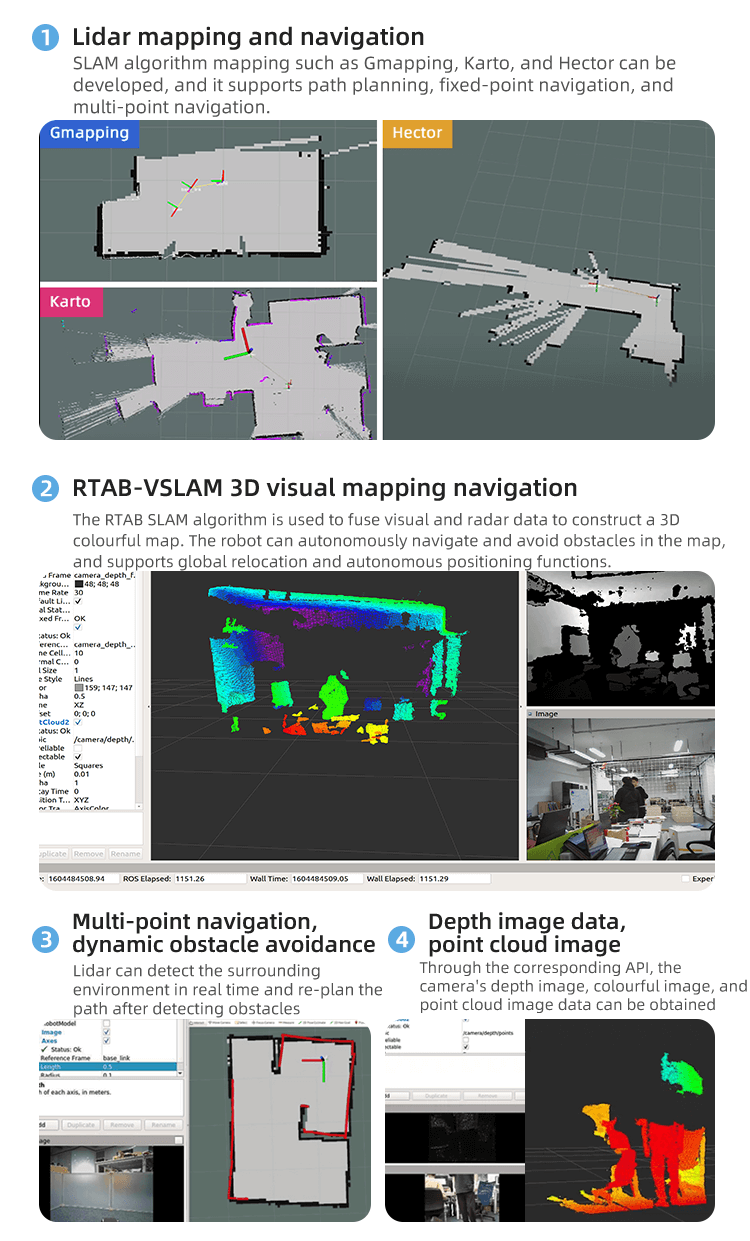

SLAM mapping and navigation: With the XR-ROS human-computer interaction system, lidar SLAM mapping and autonomous navigation can be run from the app or a virtual machine, and the robot automatically plans new routes to avoid obstacles encountered along the way.
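The re-planning idea can be illustrated with a small Python sketch: a path is planned on an occupancy grid, an obstacle appears on it, and a new route is computed around it. This uses a plain breadth-first search on a hypothetical 3x4 grid; the description does not name the planner the product uses, and `plan_path` is an illustrative name only.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Shortest path on a 4-connected occupancy grid (1 = obstacle).

    Returns a list of (row, col) cells from start to goal, or None if
    the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


grid = [[0, 0, 0, 0] for _ in range(3)]  # empty map
start, goal = (1, 0), (1, 3)
print("initial route:", plan_path(grid, start, goal))

grid[1][2] = 1  # an obstacle is detected on the planned route
print("re-planned route:", plan_path(grid, start, goal))
```

A real navigation stack works on a lidar-built costmap and also accounts for the robot's footprint and steering limits, which this sketch ignores.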

3D depth visual mapping and navigation: The RTAB-Map SLAM algorithm combines visual and lidar information to build a three-dimensional color map. Within this map, the robot can navigate autonomously and avoid obstacles, and it supports global relocalization and autonomous positioning.

OpenCV visual line tracking: Using camera-based machine vision, the robot detects black lines on the road and follows them autonomously.
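The core of line following can be sketched in a few lines of Python: find the dark pixels in one image row, take their centroid, and steer in proportion to how far it lies from the image center. This sketch uses plain Python lists so it runs without a camera; a real implementation would take frames from the camera and use OpenCV for thresholding. The function names, threshold, and gain are illustrative assumptions, not the product's code.

```python
def line_offset(row, threshold=80):
    """Offset of the dark line from the image center, in [-1, 1].

    row is one scanline of grayscale values (0 = black, 255 = white).
    Returns None when no pixel is darker than the threshold.
    """
    if len(row) < 2:
        raise ValueError("row must contain at least two pixels")
    dark = [x for x, value in enumerate(row) if value < threshold]
    if not dark:
        return None  # line lost
    centroid = sum(dark) / len(dark)
    center = (len(row) - 1) / 2
    return (centroid - center) / center


def steering_command(offset, gain=0.5):
    """Proportional steering: positive means steer right, toward the line."""
    return gain * offset


# Hypothetical scanline with a black line right of center.
row = [255, 255, 255, 255, 255, 255, 20, 15, 255]
offset = line_offset(row)
print("offset:", offset, "steering:", steering_command(offset))
```

When `line_offset` returns `None`, the caller has to decide what to do, for example stop or keep the last steering command.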

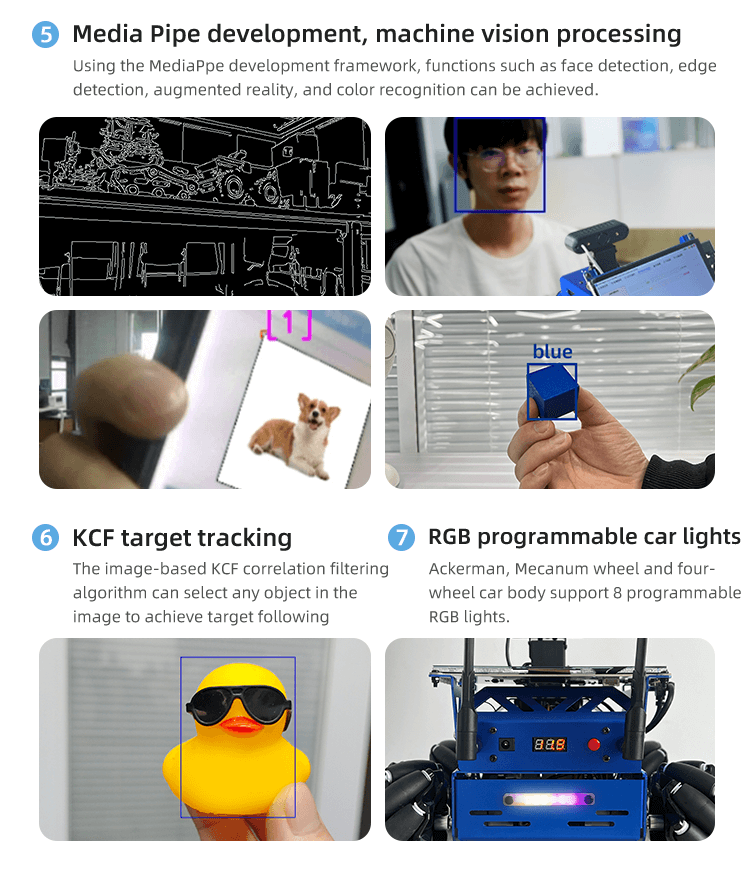

Machine vision processing: A built-in vision development framework provides functions such as face detection, edge detection, augmented reality, and color recognition.